7 steps to conducting a technical SEO audit

Nikki Halliwell, Senior Technical SEO Manager

9 min read

Friday, 11th March 2022

Have you been auditing your websites thoroughly? There's no single right way for an audit to look, but here are 7 steps to take when conducting a technical SEO audit.

1. Gather basic technical information

Do a manual review of the website

The first step in auditing a website is to review it manually. Click through the site as a user would when trying to complete its primary goals, such as making a purchase or submitting an enquiry.

While doing this, note anything that draws your attention or seems unusual. You can also note things that you think are being done well.

Use crawling and auditing tools

Run the website through your crawling and auditing tools of choice. Rise at Seven uses Screaming Frog and Sitebulb as crawlers, but you can also use OnCrawl, JetOctopus, DeepCrawl and others. For the purposes of this article, we will be using Screaming Frog.

Useful auditing tools include Semrush and Ahrefs.

2. Data from analytics tools

Check Google Search Console Reports

Google Search Console (GSC) is a powerful tool that includes many different reports.

The Performance report gives an overview of performance in search engine results pages (SERPs). This includes clicks, impressions, average position and average click-through rate.
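Clicks and impressions are enough to recompute CTR yourself, which helps when you slice an export by query or page. Below is a minimal Python sketch, assuming you have exported query rows from the Performance report. The `QueryRow` type, the function names, the thresholds and the sample numbers are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class QueryRow:
    """One row of a hypothetical Performance report export."""
    query: str
    clicks: int
    impressions: int


def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate as a fraction; 0.0 when there were no impressions."""
    return clicks / impressions if impressions else 0.0


def overall_ctr(rows: list[QueryRow]) -> float:
    # Total clicks over total impressions, rather than an average of per-query CTRs.
    return ctr(sum(r.clicks for r in rows), sum(r.impressions for r in rows))


def low_ctr_queries(rows: list[QueryRow], min_impressions: int = 1000,
                    max_ctr: float = 0.01) -> list[str]:
    # Queries that are seen often but rarely clicked; thresholds are arbitrary examples.
    return [
        r.query
        for r in rows
        if r.impressions >= min_impressions and ctr(r.clicks, r.impressions) < max_ctr
    ]


rows = [
    QueryRow("technical seo audit", 120, 4000),
    QueryRow("seo audit checklist", 5, 2500),
    QueryRow("robots.txt example", 30, 300),
]
print(f"Overall CTR: {overall_ctr(rows):.2%}")
print("Low-CTR queries:", low_ctr_queries(rows))
```

High-impression, low-CTR queries are often a good starting point when you later review page titles and meta descriptions.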

The Coverage report shows which pages are indexed and are available to appear in Google, and which pages are currently excluded from SERPs - and why.

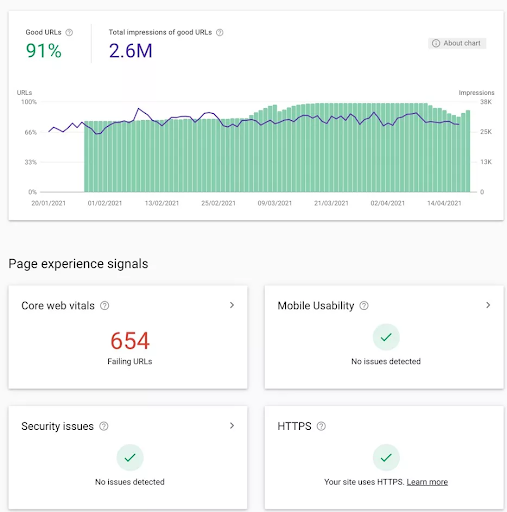

The Page Experience report shows whether the website meets Google's page experience signals. The Experience section of GSC contains the Page Experience, Core Web Vitals and Mobile Usability reports.

Check Google Analytics and Look for Trends in the Data

In Google Analytics, go to Audience > Overview, set the date range to the last 12 months and download the data. Then look at how the website acquires traffic by going to Acquisition > All Traffic > Channels, set the same date range and download the data.

Once you have gathered the data, spend some time analysing it to spot trends, such as seasonal patterns or sudden drops, and anything else of note.
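One simple way to spot trends is to compare each month with the previous one. The sketch below assumes monthly session totals have been copied from a Google Analytics export into a dictionary keyed by `YYYY-MM`; the figures, function names and the -20% alert threshold are made up for illustration.

```python
from __future__ import annotations


def month_over_month(sessions: dict[str, int]) -> dict[str, float]:
    """Percentage change for each month versus the previous month."""
    months = sorted(sessions)  # 'YYYY-MM' keys sort chronologically
    changes: dict[str, float] = {}
    for prev, cur in zip(months, months[1:]):
        if sessions[prev]:  # skip months that follow a zero-traffic month
            changes[cur] = (sessions[cur] - sessions[prev]) / sessions[prev] * 100
    return changes


def flag_drops(changes: dict[str, float], threshold: float = -20.0) -> list[str]:
    # Months whose change is at or below the (hypothetical) alert threshold.
    return [month for month, pct in changes.items() if pct <= threshold]


# Hypothetical monthly organic sessions.
organic = {"2021-10": 10000, "2021-11": 10500, "2021-12": 7800, "2022-01": 9900}
changes = month_over_month(organic)
for month, pct in changes.items():
    print(f"{month}: {pct:+.1f}%")
print("Months to investigate:", flag_drops(changes))
```

A flagged month is only a prompt for investigation; seasonality, tracking changes or site migrations can all explain a drop.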

Third-party tools

To gather additional data, I use:

- Semrush, to get visibility data. Information to gather includes Authority Score, Organic Search Traffic, Traffic Trend, Keywords Trend, SERP Features, Top Organic Keywords, and Organic Position Distribution.

- Ahrefs, to check visibility. Information to gather includes URL Rating, Domain Rating, Organic keywords, Organic traffic, Organic positions, and Top pages.

3. Backlink profile

Number of backlinks

Using tools such as Ahrefs, you can find the number of backlinks pointing to the website. You can export this list for future use and analysis.

Number of referring domains

Staying within Ahrefs, you can also find the number of referring domains, that is, the unique domains linking to the website you are auditing. As before, you can export this list of domains for further analysis.
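If you only have an export of individual backlinks, you can derive a rough referring-domain count from it. This is a minimal sketch, assuming the export is a list of linking URLs; the function name and sample URLs are hypothetical. Note that it groups by hostname (dropping a leading `www.`), not by registrable domain, so `blog.example.net` and `example.net` count separately; handling that properly would need the Public Suffix List, and the result will not necessarily match Ahrefs' own figure.

```python
from collections import Counter
from urllib.parse import urlsplit


def referring_domains(backlink_urls):
    """Count backlinks per referring hostname, ignoring a leading 'www.'."""
    counts = Counter()
    for url in backlink_urls:
        host = urlsplit(url).hostname or ""  # .hostname is already lowercased
        if host.startswith("www."):
            host = host[4:]
        if host:
            counts[host] += 1
    return counts


# Hypothetical backlink export.
links = [
    "https://www.example.com/post-1",
    "https://example.com/post-2",
    "http://news.example.org/story",
    "https://blog.example.net/a?ref=1",
]
domains = referring_domains(links)
print(len(domains), "referring hostnames")
print(domains.most_common(1))
```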

4. Indexing, crawling and rendering

Check how many pages are indexed

Contrary to popular belief, the site: search operator does not show the number of URLs in Google's index. Instead, it shows an estimate of how many pages Google is aware of, so the results may include pages that Google has crawled but not yet indexed, or pages that have not yet been removed from the index. The most reliable way to see how many pages are indexed is the Coverage report in GSC.

Check to see if the number of valid indexable pages in GSC corresponds to the number of indexable pages with a 200 status code from your crawl.
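Comparing counts is a start, but comparing the URLs themselves shows where the gap is. The sketch below assumes you have a list of indexed URLs from GSC and a list of indexable 200 URLs from your crawl export; keep in mind that GSC exports may not list every URL. The helper names are hypothetical, and the light normalisation (lowercase scheme and host, drop fragments) is an assumption you may need to adjust, for example for trailing-slash conventions.

```python
from __future__ import annotations

from urllib.parse import urlsplit, urlunsplit


def normalise(url: str) -> str:
    """Lowercase scheme and host, default the path to '/', and drop any #fragment."""
    parts = urlsplit(url.strip())
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(),
                       parts.path or "/", parts.query, ""))


def index_gap(gsc_indexed, crawl_indexable) -> dict[str, set[str]]:
    gsc = {normalise(u) for u in gsc_indexed}
    crawl = {normalise(u) for u in crawl_indexable}
    return {
        # Indexed but not found as indexable in the crawl: orphaned or outdated pages?
        "indexed_not_crawled": gsc - crawl,
        # Indexable in the crawl but not indexed: quality or discovery issues?
        "crawled_not_indexed": crawl - gsc,
    }


gaps = index_gap(
    ["https://example.com/", "https://example.com/old-page"],
    ["https://Example.com/", "https://example.com/new-page#top"],
)
for label, urls in gaps.items():
    print(label, sorted(urls))
```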

Review the robots.txt

The robots.txt file tells search engines which URLs they can crawl. It is useful for keeping crawlers away from pages that offer no search value, but it is not a privacy or security measure: the file is publicly readable, and a disallowed URL can still be indexed if other pages link to it. Some common pages that make sense to disallow include duplicate pages, thank you pages, checkout pages and customer accounts.

Check that the necessary rules are included in the file, and ensure it includes a link to the XML sitemap, as described in Google's sitemap documentation: https://developers.google.com/search/docs/advanced/sitemaps/build-sitemap. The robots.txt file itself must sit at the root of the host, for example https://www.example.com/robots.txt.
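To spot-check which URLs a set of rules blocks, Python's standard `urllib.robotparser` module can parse a robots.txt file and answer allow/block questions. The rules and URLs below are a hypothetical example. Treat this as a rough check only: `robotparser` applies the first matching prefix rule and does not support Google's `*` and `$` wildcards or its longest-match precedence, so Search Console's robots.txt tooling remains the authority for Googlebot.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, fetch https://<host>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
Disallow: /thank-you

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in [
    "https://www.example.com/products/blue-widget",
    "https://www.example.com/checkout/basket",
    "https://www.example.com/thank-you?order=123",
]:
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(url, verdict)

# site_maps() (Python 3.8+) returns the Sitemap: URLs, or None if there are none.
print("Sitemaps:", parser.site_maps())
```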

Review use of robots meta tags

Robots meta tags indicate to search engines whether a page on a website should be indexed or not. Check to see if any robots meta tags accidentally block resources you feel should be indexed.

Conversely, check whether any pages that should be kept out of the index with a robots meta tag are missing one, such as login pages, thank you pages and internal search results.
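Crawlers report this for you, but the check itself is simple. A minimal sketch using Python's built-in `html.parser`, assuming you already have a page's HTML; the class and function names are hypothetical. It only looks at `<meta name="robots">` and `<meta name="googlebot">` tags and treats `none` as equivalent to `noindex, nofollow`; it does not check the `X-Robots-Tag` HTTP header, which can also carry `noindex`.

```python
from __future__ import annotations

from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = {name: (value or "") for name, value in attrs}
        if attrs.get("name", "").lower() in {"robots", "googlebot"}:
            for directive in attrs.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def is_noindex(html: str) -> bool:
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return "noindex" in parser.directives or "none" in parser.directives


print(is_noindex('<head><meta name="robots" content="noindex, follow"></head>'))
print(is_noindex('<meta name="ROBOTS" content="index,follow">'))
```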

Do pages on the website render correctly?

Rendering is the process by which search engines execute a page's code, including JavaScript, to see the page as a browser would. Understanding how they view a website can help you identify why a certain page is not performing as well as expected. The Mobile-Friendly Test will render a page and show you the resulting HTML.

Before starting your crawl, you can set up Screaming Frog to render JavaScript by going to Configuration > Spider > Rendering and choosing JavaScript and Googlebot Mobile.
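A quick way to see what rendering changes is to compare the links in the raw HTML with those in the rendered HTML, for example HTML copied from the Mobile-Friendly Test. Links that only appear after rendering depend on JavaScript to be discovered. This is a minimal sketch with hypothetical names and sample HTML; it compares `href` values exactly as written, without resolving relative URLs.

```python
from __future__ import annotations

from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects the href of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.add(href)


def links(html: str) -> set[str]:
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.hrefs


def rendering_only_links(raw_html: str, rendered_html: str) -> set[str]:
    # Links present after rendering but absent from the server's HTML response.
    return links(rendered_html) - links(raw_html)


RAW = '<a href="/">Home</a><div id="app"></div>'
RENDERED = '<a href="/">Home</a><div id="app"><a href="/products">Products</a></div>'
print(sorted(rendering_only_links(RAW, RENDERED)))
```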

Review the XML sitemap

An XML sitemap should list the indexable pages on the website that return a 200 status code, and only those pages.

In Screaming Frog, you can see if any non-indexable pages are included under Overview > Sitemaps > Non-Indexable URLs in Sitemap. However, to do this effectively, you need to select ‘Crawl Linked XML Sitemaps’ under Configuration > Spider > Crawl before running your crawl.
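The same check can be sketched outside Screaming Frog by reading the sitemap's `<loc>` entries and looking each one up in your crawl data. This assumes a standard `urlset` sitemap (sitemap index files are not handled) and a hypothetical `crawl` mapping of URL to `(status_code, indexable)` built from a crawl export; the function names are also hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text):
    """Return the <loc> values of a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text]


def sitemap_problems(urls, crawl):
    """Flag sitemap URLs that are not 200 + indexable; crawl maps URL -> (status, indexable)."""
    problems = {}
    for url in urls:
        status, indexable = crawl.get(url, (None, False))
        if status is None:
            problems[url] = "not found in crawl"
        elif status != 200 or not indexable:
            problems[url] = f"status {status}, indexable={indexable}"
    return problems


SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/old-offer</loc></url>
  <url><loc>https://www.example.com/thank-you</loc></url>
</urlset>"""

crawl = {
    "https://www.example.com/": (200, True),
    "https://www.example.com/old-offer": (301, False),
    "https://www.example.com/thank-you": (200, False),
}
for url, problem in sitemap_problems(sitemap_urls(SITEMAP), crawl).items():
    print(url, "->", problem)
```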

Site Speed

PageSpeed Insights and the Page Experience report in GSC can be used to test site speed.

GTmetrix is another of my personal favourites; it is useful for finding more actionable tips and identifying specific problems.

WebPageTest is also great for getting minute details about your page speed issues and allows you to test on mobile devices from multiple locations.
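To test more than a handful of URLs, PageSpeed Insights also has an API (v5, `runPagespeed` endpoint) that returns the Lighthouse results as JSON. The sketch below builds the request URL and reads the Lighthouse performance score, which the API reports on a 0 to 1 scale. The helper names are hypothetical, and the network call in `fetch_psi` is not exercised in the example; heavier use typically requires an API key.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def psi_request_url(page_url, strategy="mobile", api_key=None):
    """Build a PageSpeed Insights API v5 request URL."""
    params = {"url": page_url, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


def performance_score(response):
    """Lighthouse performance score scaled to 0-100, or None if it is absent."""
    score = (response.get("lighthouseResult", {})
             .get("categories", {})
             .get("performance", {})
             .get("score"))
    return None if score is None else round(score * 100)


def fetch_psi(page_url, strategy="mobile", api_key=None):
    # Performs a live request; Lighthouse runs can take a while, hence the long timeout.
    with urlopen(psi_request_url(page_url, strategy, api_key), timeout=120) as resp:
        return json.load(resp)


print(psi_request_url("https://www.example.com/"))
# A trimmed, hypothetical response shape:
print(performance_score({"lighthouseResult": {"categories": {"performance": {"score": 0.87}}}}))
```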

5. Internal linking

Internal Linking Utilisation

Internal linking is incredibly important, and how well it is used can make or break a website's performance in search. Internal links with descriptive anchor text show search engines which pages you consider most important and help them determine what each page is about.

Breadcrumbs are also a very effective way to improve internal linking. They make navigation easier as well as encouraging users to visit more pages of a website.

When auditing, check whether the internal linking makes sense or whether improvements can be made. You should also check whether breadcrumbs are present across the entire website.
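Counting inlinks per URL is a quick way to see which pages the internal linking treats as important, and which known pages receive no internal links at all (orphan pages). This sketch assumes a hypothetical list of `(source, target)` internal links from a crawl export, plus a set of known URLs from another source such as the XML sitemap or analytics; the function names are hypothetical too.

```python
from collections import Counter


def inlink_counts(edges):
    """edges: iterable of (source_url, target_url) internal links."""
    counts = Counter()
    for source, target in edges:
        if source != target:  # ignore self-links
            counts[target] += 1
    return counts


def orphan_pages(known_urls, edges):
    # Known pages that no other crawled page links to.
    counts = inlink_counts(edges)
    return sorted(url for url in known_urls if counts[url] == 0)


edges = [
    ("/", "/products"),
    ("/", "/blog"),
    ("/blog", "/"),
    ("/blog", "/blog/audit-guide"),
    ("/products", "/products"),
    ("/blog/audit-guide", "/products"),
]
known = {"/", "/products", "/blog", "/blog/audit-guide", "/landing/spring-sale"}

print(inlink_counts(edges).most_common())
print("Orphans:", orphan_pages(known, edges))
```

Note that a crawler can only find orphan pages this way if it has a list of URLs beyond what it discovered by following links.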

Are canonical links present and used correctly?

All web pages on the website should have a canonical link element. To check for missing canonical links in Screaming Frog, go to Overview > Canonicals > Missing.

You should check that any canonical links are sensible, and that none unintentionally point to a different URL, which would signal to Google that the other URL should be indexed instead of the current page. Self-referencing canonicals are fine for most URLs.
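A minimal sketch of the same check for a single page, assuming you have its URL and HTML: it finds `<link rel="canonical">` elements, resolves relative `href` values against the page URL, and classifies the result. The names are hypothetical, and the comparison is an exact string match, so differences such as a trailing slash will show as "canonicalised" and may need a human eye.

```python
from __future__ import annotations

from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collects href values of <link rel="canonical"> elements."""

    def __init__(self):
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        rel_values = (attrs.get("rel") or "").lower().split()
        if tag == "link" and "canonical" in rel_values and attrs.get("href"):
            self.canonicals.append(attrs["href"])


def canonical_status(page_url: str, html: str) -> str:
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    if not parser.canonicals:
        return "missing"
    if len(set(parser.canonicals)) > 1:
        return "multiple conflicting canonicals"
    target = urljoin(page_url, parser.canonicals[0])  # resolve relative hrefs
    return "self-referencing" if target == page_url else f"canonicalised to {target}"


print(canonical_status("https://www.example.com/shoes", '<link rel="canonical" href="/shoes">'))
print(canonical_status("https://www.example.com/shoes?colour=red",
                       '<link rel="canonical" href="https://www.example.com/shoes">'))
```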

Structured Data Utilisation

Google uses structured data to better understand the content of the web page and to enable the use of special search features and enhancements (known as rich results).

To check whether structured data is valid, you can use the Schema Markup Validator, which replaced Google's Structured Data Testing Tool, or use the Rich Results Test to check whether the page is eligible for rich results.
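Before reaching for those tools, a first-pass check across many pages is whether each JSON-LD block even parses. This sketch extracts `<script type="application/ld+json">` blocks with Python's `html.parser`, tries to decode each with `json`, and lists the top-level `@type` values. The names and sample markup are hypothetical; it does not understand `@graph` containers or check Google's rich result requirements, so the validators above are still needed.

```python
from __future__ import annotations

import json
from html.parser import HTMLParser


class JsonLdParser(HTMLParser):
    """Collects the raw text of JSON-LD script blocks."""

    def __init__(self):
        super().__init__()
        self._in_jsonld = False
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def jsonld_types(html: str):
    """Return (top-level @type values, JSON parse errors) for a page."""
    parser = JsonLdParser()
    parser.feed(html)
    parser.close()
    types, errors = [], []
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            errors.append(str(exc))
            continue
        items = data if isinstance(data, list) else [data]
        types.extend(item.get("@type") for item in items if isinstance(item, dict))
    return types, errors


PAGE = """<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "BreadcrumbList", "itemListElement": []}
</script>
<script type="application/ld+json">{"@type": "Product", "name": "Widget",}</script>"""

types, errors = jsonld_types(PAGE)
print("Types:", types)
print("Parse errors:", len(errors))
```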

6. Redirects and status codes

Server errors

Server errors are those that return a 5xx status code. There are a few types of server error, but the most common are 500 (Internal Server Error) and 503 (Service Unavailable), which is often returned during maintenance.

A large number of 5xx status codes may indicate that there are some problems with the server. It could be that the server is overloaded or may need some additional configuration.
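To judge what "a large number" means for a given site, it helps to summarise the crawl by status class. A minimal sketch, assuming a hypothetical mapping of URL to status code taken from a crawl export; the function names are hypothetical as well.

```python
from collections import Counter


def status_summary(statuses):
    """statuses: mapping of URL -> HTTP status code. Returns counts per class (2xx, 3xx...)."""
    return Counter(f"{code // 100}xx" for code in statuses.values())


def server_error_share(statuses):
    # Fraction of crawled URLs that returned a 5xx status.
    if not statuses:
        return 0.0
    return sum(1 for code in statuses.values() if 500 <= code <= 599) / len(statuses)


crawl = {"/": 200, "/a": 200, "/b": 503, "/c": 500, "/d": 404}
print(dict(sorted(status_summary(crawl).items())))
print(f"5xx share: {server_error_share(crawl):.0%}")
```

Because 5xx responses can be intermittent, re-crawling the affected URLs, ideally at a lower crawl speed, helps separate a genuine server problem from load caused by the crawl itself.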

404s

It is natural for a website to have some 404s, and it is important that the website can return a 404 status code. Try navigating to nonsense or test URLs and make sure they return a 404 error.

These Not Found errors are a natural part of the web, as they tell users that the information they're requesting is not available. If users are instead redirected to the homepage, for example, they are likely to be confused, which is a poor user experience. What's more, Google may treat those redirects to your homepage as soft 404s.

Similarly, having lots of pages that return 404 or 410 (Gone) status codes will also lead to a poor user experience.
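The nonsense-URL test can be scripted. The sketch below requests a random path that should not exist and classifies the response: a 404 or 410 is what you want, while a 200, especially after a redirect to another URL, suggests a soft 404. All names are hypothetical, and only the classification logic is exercised in the tests; `check_site` makes a live request with Python's `urllib`, which follows redirects automatically.

```python
import uuid
from urllib.error import HTTPError
from urllib.parse import urljoin
from urllib.request import urlopen


def nonsense_url(site_root):
    # A random path that should not exist on the site.
    return urljoin(site_root, f"/this-page-should-not-exist-{uuid.uuid4().hex}")


def classify_not_found(requested_url, status, final_url):
    if status in (404, 410):
        return "ok"
    if final_url.rstrip("/") != requested_url.rstrip("/"):
        return f"redirected to {final_url} (possible soft 404)"
    return f"returned {status} (possible soft 404)"


def check_site(site_root):
    url = nonsense_url(site_root)
    try:
        with urlopen(url, timeout=30) as resp:  # redirects are followed here
            return classify_not_found(url, resp.status, resp.url)
    except HTTPError as err:  # 4xx/5xx responses arrive as exceptions
        return classify_not_found(url, err.code, url)


print(classify_not_found("https://www.example.com/x", 200, "https://www.example.com/"))
```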

Look out for Redirect Chains

This is when URLs redirect from A > B > C and so on. Googlebot follows only a limited number of redirects in one go (around five), so URLs further along a long chain could remain undiscovered. A URL should only be redirected once, and chains should be reduced where possible, e.g. A > C and B > C.

In Screaming Frog, find redirect chains under Reports > Redirects > Redirect Chains.
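Reducing chains means pointing every source straight at its final destination. This minimal sketch assumes a hypothetical mapping of one-hop redirects (source to target), for example taken from a redirect map or crawl export; it follows each chain to the end, counts the hops, raises an error on redirect loops, and produces flattened one-hop rules. The function names are hypothetical.

```python
def resolve_redirects(redirects):
    """redirects: source URL -> target URL (one hop each).
    Returns source -> (final_url, hops). Raises ValueError on a redirect loop."""
    resolved = {}
    for source in redirects:
        path = [source]
        current = source
        while current in redirects:
            current = redirects[current]
            if current in path:
                raise ValueError("redirect loop: " + " > ".join(path + [current]))
            path.append(current)
        resolved[source] = (current, len(path) - 1)
    return resolved


def flattened_rules(redirects):
    """One-hop rules pointing each source directly at its final destination."""
    return {src: final for src, (final, _) in resolve_redirects(redirects).items()}


redirects = {"/a": "/b", "/b": "/c", "/old": "/a"}
for src, (final, hops) in resolve_redirects(redirects).items():
    if hops > 1:
        print(f"chain: {src} reaches {final} in {hops} hops")
print(flattened_rules(redirects))
```

After flattening, internal links should also be updated to point at the final URLs so that they don't rely on redirects at all.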

Check if there are redirects with incorrect statuses

In most cases, the website you are auditing should use 301 (permanent) redirects. You can use 302 (temporary) redirects to indicate a temporary change, such as the need to log in to access an area, e.g. customer account or paywalled content.

Make sure the website uses redirects in line with its purpose.

7. Content, headings and keywords

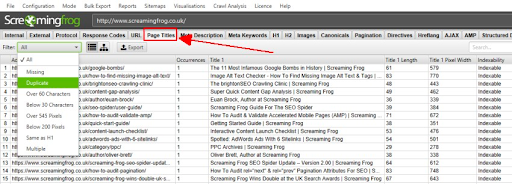

Review Page Titles

Page titles provide search engines and users with valuable information about the page. Every page should have a <title> tag, and each title should be unique.

It is best practice to keep page titles within 30 to 60 characters to make the best use of available space and to reduce the risk of being truncated in SERPs.

To check page titles in Screaming Frog, go to Overview > Page Titles. To quickly locate any missing page titles, go to Overview > Page Titles > Missing.
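The checks above (missing, duplicate and out-of-range titles) can be combined in one pass over a crawl export. This sketch assumes a hypothetical mapping of URL to title, with an empty string or `None` for a missing title; the function name and sample titles are hypothetical. Character count is only a proxy, since Google truncates titles by pixel width.

```python
from collections import Counter


def title_issues(titles, min_len=30, max_len=60):
    """titles: mapping of URL -> page title ('' or None when missing)."""
    cleaned = {url: (title or "").strip() for url, title in titles.items()}
    counts = Counter(title for title in cleaned.values() if title)
    issues = {}
    for url, title in cleaned.items():
        problems = []
        if not title:
            problems.append("missing")
        else:
            if counts[title] > 1:
                problems.append("duplicate")
            if not min_len <= len(title) <= max_len:
                problems.append(f"length {len(title)}")
        if problems:
            issues[url] = problems
    return issues


titles = {
    "/": "Handmade Widgets Delivered Across the UK | Example",
    "/blue": "Widgets",
    "/red": "Widgets",
    "/contact": None,
}
for url, problems in title_issues(titles).items():
    print(url, problems)
```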

Review Meta Descriptions

The more descriptive, attractive, and straight to the point a meta description is, the more likely users will be to click on the result.

Where a description is missing, Google can generate its own - what's more, Google may rewrite the description even when a unique description is used. Despite this, it’s still best practice to write unique descriptions for your content.

To check meta descriptions in Screaming Frog, go to Overview > Meta Descriptions.

It is recommended to keep descriptions between 70 and 155 characters to make the best use of available space and to reduce the risk of being truncated in SERPs.
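If you are checking pages outside a crawler, the meta description and its length can be read straight from the HTML. A minimal sketch with Python's `html.parser`, using the 70 to 155 character range above; the class name, function name and sample markup are hypothetical, and as with titles, character count only approximates the pixel-based truncation in SERPs.

```python
from __future__ import annotations

from html.parser import HTMLParser


class MetaDescriptionParser(HTMLParser):
    """Collects the content of every <meta name="description"> tag."""

    def __init__(self):
        super().__init__()
        self.descriptions: list[str] = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.descriptions.append((attrs.get("content") or "").strip())


def description_issue(html: str, min_len: int = 70, max_len: int = 155):
    """Return a short issue label, or None if the description looks fine."""
    parser = MetaDescriptionParser()
    parser.feed(html)
    parser.close()
    if not parser.descriptions or not parser.descriptions[0]:
        return "missing"
    if len(parser.descriptions) > 1:
        return "multiple"
    length = len(parser.descriptions[0])
    return None if min_len <= length <= max_len else f"length {length}"


print(description_issue('<meta name="description" content="Widgets.">'))
print(description_issue("<title>Widgets</title>"))
```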

Use of Headings

It is recommended that every page on a website has one H1 heading. The H1 should contain the target keyword to communicate the page's topic to both users and search engines.

To find pages without an H1 in Screaming Frog, go to Overview > H1 > Missing. A page without an H1 misses a significant opportunity to give search engines valuable information.
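Both recommendations (exactly one H1, containing the target keyword) can be checked per page. A minimal sketch with Python's `html.parser`, assuming you have the page HTML and, optionally, a target keyword; the class name, function name and `keyword` parameter are hypothetical, and the keyword match is a simple case-insensitive substring test.

```python
from __future__ import annotations

from html.parser import HTMLParser


class H1Collector(HTMLParser):
    """Collects the text of every <h1>, including text inside nested tags."""

    def __init__(self):
        super().__init__()
        self.h1_texts: list[str] = []
        self._in_h1 = False

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self._in_h1 = True
            self.h1_texts.append("")

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False

    def handle_data(self, data):
        if self._in_h1:
            self.h1_texts[-1] += data


def h1_issue(html: str, keyword: str | None = None):
    """Return a short issue label, or None if the page has one suitable H1."""
    parser = H1Collector()
    parser.feed(html)
    parser.close()
    texts = [" ".join(text.split()) for text in parser.h1_texts]  # collapse whitespace
    if not texts:
        return "missing"
    if len(texts) > 1:
        return f"{len(texts)} H1s"
    if keyword and keyword.lower() not in texts[0].lower():
        return "keyword not in H1"
    return None


print(h1_issue("<h1>Technical SEO audit guide</h1>", "technical seo audit"))
print(h1_issue("<p>No heading here</p>"))
```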

Summary

And there we have it: 7 steps to conducting a technical SEO audit that others will envy. I hope you find this article helpful. If you have any questions, feel free to contact me on Twitter, or get in touch with Rise at Seven to find out more about our SEO services.