250k+ links and no disavow file – here’s what happened when we uploaded one

During a Google Webmaster Central hangout in August 2018, Google’s John Mueller suggested that the links shown in Search Console are “the ones that are worth focusing on” and enough to compile a disavow file. I wanted to put this to the test.

Recently I worked with two websites that had a combined 260k links, literally tens of millions of sessions per year and no disavow files. (If I'd had tens of very similar websites, I'd have run a much more scientific experiment with control groups... but I didn't, so this is what you've got. Hopefully you'll agree it was worthwhile.)

Neither website had ever been subject to a manual action and, as far as I’m aware, neither had ever been affected by the Penguin algorithm. In the hangout John also said that “sometimes if you’re aware of link building that was done in a significant way then the Search Console links can give you an idea of the general pattern, but then it’s still worth looking to see if there are more links that you can also include there”. There had been almost no link building activity on either site since the company parted ways with its last SEO agency. So the histories of the two websites are very similar, except that one site had a much larger (mostly naturally acquired) link profile than the other.

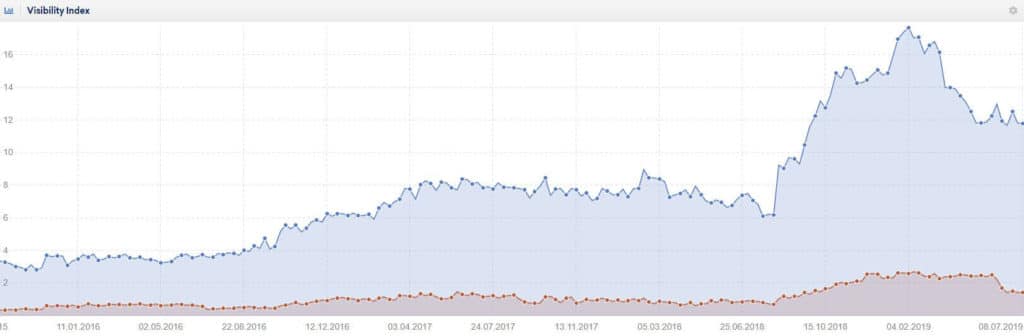

You can see that the sites tended to benefit from, and suffer from, the same updates. They use the same technology; the site structure is identical; the pages are unique but the templates are the same.

What we did

The backlink profiles from both sites were exported from Google Search Console.

We audited the link profile of site #1 using only the CSV file exported from Search Console. This meant we had no details on:

- Anchor text

- Whether the links were rel="nofollow"

- The target page

- Links to the referring page

- Whether any third-party tools thought the links were any good or not

…only the link URL and the date it was last crawled.

From the CSV file we compiled a disavow file, focusing on links that:

- Used non-brand anchor text in a way that didn’t look natural

- Were on scraper sites

- Were guest posts (not just labelled as guest posts – we actively disavowed most links that looked like guest posts)
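The judgement calls above were made by hand, but turning the resulting list into an uploadable file is mechanical. Below is a minimal Python sketch of that last step. It assumes the Search Console export is a CSV whose linking-page column is headed `Linking page` (a hypothetical name; check the header row of your own export) and that you have already decided which URLs and whole domains to disavow. It writes Google's plain-text disavow format: one entry per line, a `domain:` prefix for whole domains, and `#` for comment lines.

```python
import csv
import io

# Hypothetical column header: check the first row of your own Search Console export.
URL_COLUMN = "Linking page"


def read_link_urls(csv_text: str, column: str = URL_COLUMN) -> list[str]:
    """Return the linking-page URLs from a Search Console links export (as text)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row[column].strip() for row in reader if row.get(column)]


def build_disavow(flagged_urls: set[str], flagged_domains: set[str]) -> str:
    """Render hand-flagged URLs and domains in Google's plain-text disavow format."""
    lines = ["# Links flagged during a manual audit of the Search Console export"]
    # Whole domains first (e.g. scraper sites), one 'domain:' entry each.
    lines += [f"domain:{domain}" for domain in sorted({d.lower() for d in flagged_domains})]
    # Then individual pages (e.g. a single guest post on an otherwise decent site).
    lines += sorted(flagged_urls)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    export = (
        "Linking page,Last crawled\n"
        "https://scraper.example/copy-of-our-page,2019-05-01\n"
        "https://blog.example.org/guest-post,2019-04-20\n"
    )
    print(read_link_urls(export))
    print(build_disavow({"https://blog.example.org/guest-post"}, {"scraper.example"}), end="")
```

To upload the result, save it as a UTF-8 `.txt` file, for example with `pathlib.Path("disavow.txt").write_text(text, encoding="utf-8")`.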

What makes a bad link?

Some examples of the types of links we disavowed:

Our friend Paul Madden very generously gave us a Kerboo license to help us audit site #2’s link profile. We connected Google Search Console and Ahrefs (and uploaded an export from Open Site Explorer). Before de-duplication, Search Console supplied only 28% of the total number of links across the three sources.
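Comparing exports from several tools means deciding when two rows are the same link. The rough Python sketch below computes one source's share of the total both before and after de-duplication. It assumes each tool's export has already been reduced to a list of linking-page URLs; the source names and the normalisation rules are illustrative assumptions, not a description of what Kerboo does internally.

```python
from urllib.parse import urlsplit, urlunsplit


def normalise(url: str) -> str:
    """Crude normalisation so the same linking page exported by two tools matches.

    Lower-cases scheme and host, drops any #fragment and a trailing slash.
    """
    parts = urlsplit(url.strip())
    path = parts.path.rstrip("/") or "/"
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(), path, parts.query, ""))


def source_share(exports: dict[str, list[str]], source: str) -> tuple[float, float]:
    """Return (share before de-duplication, share after de-duplication) for one source."""
    raw_total = sum(len(urls) for urls in exports.values())
    raw_share = len(exports[source]) / raw_total
    unique_all = {normalise(u) for urls in exports.values() for u in urls}
    unique_source = {normalise(u) for u in exports[source]}
    return raw_share, len(unique_source) / len(unique_all)


if __name__ == "__main__":
    # Hypothetical, tiny exports; real ones would hold thousands of rows per tool.
    exports = {
        "search_console": ["https://a.example/x"],
        "ahrefs": ["https://a.example/x/", "https://b.example/"],
        "open_site_explorer": ["HTTPS://B.example/"],
    }
    print(source_share(exports, "search_console"))  # (0.25, 0.5)
```

The raw share corresponds to the "without duplicates removed" comparison; the de-duplicated share is usually the more useful number when deciding whether one source is enough.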

Auditing site #2’s link profile within Kerboo gave us far more information about each link, so the much larger profile was actually quicker to go through than the smaller one. Kerboo spends a few hours crawling the links found in Search Console and adds that context to the platform. We looked for exactly the same things as on site #1 and didn’t pay much attention to the Link Risk and Link Value scores: they helped us to find bad sites with a lot of links in Analysis mode, but we didn’t even notice them in Investigate mode.

After auditing site #1, we decided to add 19.35% of the link profile (that we found) to the disavow file.

After auditing site #2, we decided to add 24.32% of its link profile to the disavow file.
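Percentages like these depend on what is counted, because a single `domain:` line can cover many linking pages. One way to measure how much of an exported link list a disavow file covers is sketched below in Python. It counts unique linking-page URLs and assumes that a `domain:` entry also covers that domain's subdomains; this is not necessarily how the figures above were counted.

```python
from urllib.parse import urlsplit


def parse_disavow(text: str) -> tuple[set[str], set[str]]:
    """Split disavow-file text into (domains, urls), skipping blank and '#' lines."""
    domains: set[str] = set()
    urls: set[str] = set()
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.lower().startswith("domain:"):
            domains.add(line[len("domain:"):].strip().lower())
        else:
            urls.add(line)
    return domains, urls


def is_covered(link: str, domains: set[str], urls: set[str]) -> bool:
    """True if the link is listed itself or sits on a disavowed domain."""
    if link in urls:
        return True
    host = urlsplit(link).hostname or ""
    # Assumption: a domain: entry also covers that domain's subdomains.
    return any(host == d or host.endswith("." + d) for d in domains)


def disavowed_share(links: list[str], disavow_text: str) -> float:
    """Percentage of unique linking-page URLs covered by the disavow file."""
    domains, urls = parse_disavow(disavow_text)
    unique_links = set(links)
    covered = sum(is_covered(link, domains, urls) for link in unique_links)
    return 100 * covered / len(unique_links)


if __name__ == "__main__":
    # Hypothetical data for illustration only.
    links = [
        "https://spam.example/a",
        "https://www.spam.example/b",
        "https://blog.example.org/guest-post",
        "https://news.example.net/story",
    ]
    disavow = "# audit\ndomain:spam.example\nhttps://blog.example.org/guest-post\n"
    print(round(disavowed_share(links, disavow), 2))  # 75.0
```

Counting by referring domain rather than by URL would give a different percentage for the same file, so it's worth stating which one you mean when comparing sites.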

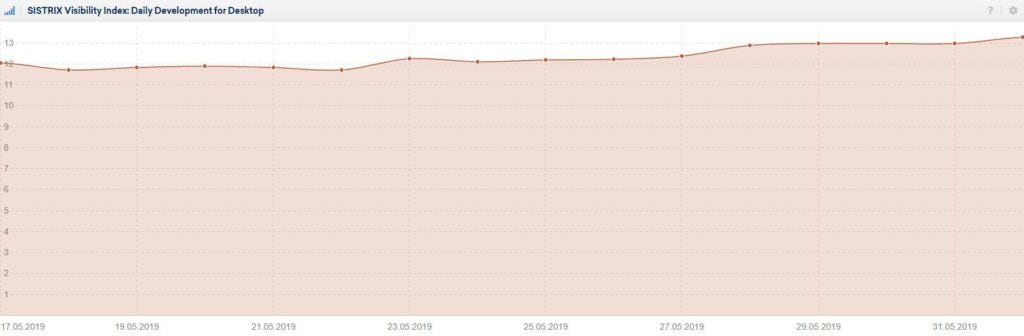

Both disavow files were uploaded on 17th May. We annotated the date in Google Analytics. Nothing substantial changed on-site over the next 2 weeks and almost no new links were acquired.

What happened

Site #1, where we audited only the links shown in Google Search Console, saw a visibility drop of 9.12% (according to SISTRIX) around 10 days later.

Site #2, where we audited the entire backlink profile shown by Search Console, Ahrefs and Moz, saw an increase of 13.23% in the same time frame.

Organic traffic from Google broadly reflected the same trend, although this is far from conclusive, because a few days later Google rolled out its pre-announced June "core algorithm update". Site #2 returned to roughly its previous visibility level (an overall decrease of 0.33%), whereas site #1 lost 37.45% of its visibility (both measured at the point when Google announced that the rollout of the update was complete). We'll obviously never know whether the disavow files had anything to do with the end results.

Not exactly a conclusion

...but what I'd take from this "experiment":

- It's probably worth looking at more than just Search Console data, even if only to make auditing the profile easier

- A site with a cleaner and bigger link profile survived the core algorithm update, while a site built on the same technology, with the exact same structure (but unique content) and a smaller, slightly less clean link profile, got a kicking

- I still think it's worth uploading a disavow file, but I don't think it's worth spending more than a day on it unless you've had link issues in the past

- The daily visibility updates from SISTRIX are VERY useful

- Paul Madden is a very nice man.